Provable defense framework for backdoor mitigation in federated learning

10-27-2022

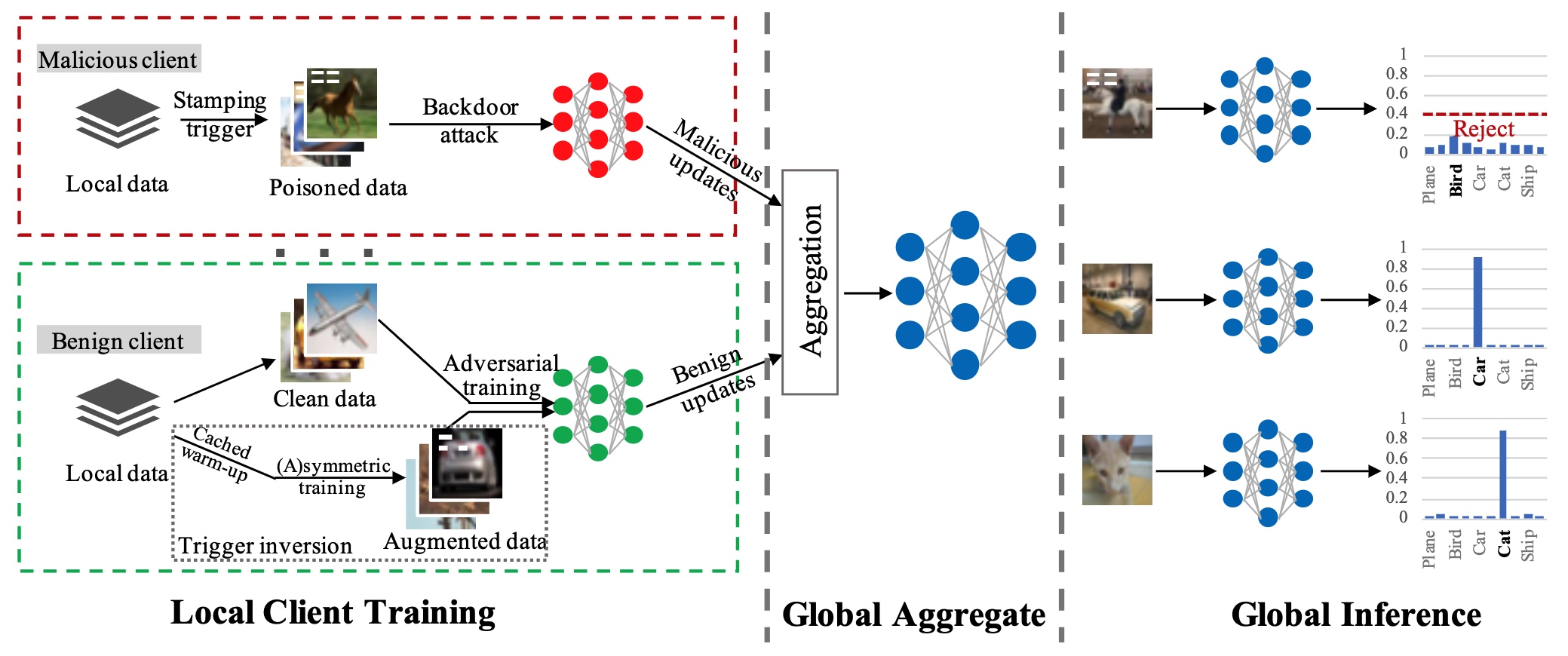

Overview of FLIP. The upper-left part (red box) shows a malicious client performing a backdoor attack, and the lower-left part (green box) illustrates the main steps of benign client model training.

When tasked with creating a robust machine learning application, how do we prevent backdoor attacks while using Federated Learning?

The researchers proposed FLIP, a Federated LearnIng Provable defense framework with a theoretical guarantee of protection against backdoor attacks launched from compromised devices.

Federated learning (FL) is a machine learning technique that trains models on local clients and devices, so that user-sensitive data stays on those devices. It builds a common, robust machine learning model while preserving data privacy and security.
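To make the setup concrete, here is a minimal sketch of one common way FL training can work, assuming the widely used federated averaging (FedAvg) rule and a toy logistic-regression model. The article does not specify the model or aggregation rule, and the helper names `local_update` and `fedavg` are hypothetical.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=5):
    """Hypothetical client step: a few epochs of gradient descent on logistic loss.
    Only the updated weights leave this function; the raw data (X, y) does not."""
    w = weights.copy()
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-X @ w))   # predicted probabilities
        w -= lr * X.T @ (p - y) / len(y)   # gradient of mean cross-entropy
    return w

def fedavg(client_weights, client_sizes):
    """Hypothetical server step: average client weights, weighted by local dataset size."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)     # shape (num_clients, num_params)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()

# Three clients holding private toy datasets of different sizes.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0, 0.5])
clients = []
for n in (50, 80, 120):
    X = rng.normal(size=(n, 3))
    clients.append((X, (X @ true_w > 0).astype(float)))

global_w = np.zeros(3)
for _ in range(20):  # 20 communication rounds
    updates = [local_update(global_w, X, y) for X, y in clients]
    global_w = fedavg(updates, [len(y) for _, y in clients])

cos = global_w @ true_w / (np.linalg.norm(global_w) * np.linalg.norm(true_w))
print("cosine similarity to the labeling direction:", round(float(cos), 3))
```

The server only ever sees weight vectors, which is the privacy property described above; it is also why a compromised client can submit a poisoned update that the server cannot inspect against the client's data.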

Researchers at Purdue University, IBM Research and Rutgers University addressed a known security problem with FL, an emerging distributed learning paradigm. Because FL is decentralized, recent studies show that individual devices may be compromised and used to perform backdoor attacks.

For this work, Professor Xiangyu Zhang and PhD students Kaiyuan Zhang, Guanhong Tao, Qiuling Xu, Siyuan Cheng, Shengwei An, Yingqi Liu, and Shiwei Feng from Purdue University’s Department of Computer Science, Dr. Pin-Yu Chen from IBM Research, and Professor Shiqing Ma from Rutgers University received the Best Paper Award at the 2022 European Conference on Computer Vision (ECCV 2022) AROW Workshop in October 2022 for their paper, “FLIP: A Provable Defense Framework for Backdoor Mitigation in Federated Learning.”

Since local model training in FL is private, attackers could hijack local clients and inject backdoors into the aggregated global model. To defend against FL backdoor attacks, a number of defenses have been proposed, focusing on robust aggregation, robust learning rates, or detection of abnormal gradient updates.

“We introduced a novel provable defense framework for mitigating backdoor threats in Federated Learning,” said PhD student Kaiyuan Zhang. He added, “The key insight is to combine trigger inversion techniques with FL training. As long as the inverted trigger satisfies our given constraint, we can guarantee the attack success rate will decrease and in the meantime, the prediction accuracy of the global model on clean data can be retained.”
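Robust aggregation, the first defense family listed earlier, replaces the server's plain average with a statistic that a single client has difficulty skewing. The sketch below is a generic illustration, not part of FLIP, and the article does not say whether this rule is among the eight baselines: it assumes the coordinate-wise median rule, and the update vectors are made-up numbers.

```python
import numpy as np

def mean_aggregate(updates):
    """Plain averaging: every client's update pulls the result linearly."""
    return np.mean(np.stack(updates), axis=0)

def median_aggregate(updates):
    """Coordinate-wise median: each parameter is aggregated independently, so a
    single extreme value cannot drag the result arbitrarily far."""
    return np.median(np.stack(updates), axis=0)

# Made-up two-parameter updates: four benign clients and one hypothetical
# malicious client that submits a scaled-up (poisoned) update.
benign = [np.array([1.0, 1.0]), np.array([1.2, 0.8]),
          np.array([0.9, 1.1]), np.array([1.1, 1.0])]
malicious = np.array([50.0, -50.0])
updates = benign + [malicious]

print("mean:  ", mean_aggregate(updates))
print("median:", median_aggregate(updates))
```

With one inflated update among five, the mean is pulled to about (10.84, -9.22), while the median stays at (1.1, 1.0), close to the benign updates. This toy example only shows robustness to a single, obviously inflated update.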

For each benign local client, FLIP adversarially trains the local model on generated (reverse-engineered) backdoor triggers that can cause misclassification. Once the local weights are aggregated on the global server, the backdoor features injected into the aggregated global model are mitigated by the benign clients’ hardening. As a result, FLIP reduces the global model’s prediction confidence on backdoor samples from high to low.
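The benign-client hardening idea can be sketched on a toy problem. This is a simplified illustration, not FLIP’s implementation: it assumes a binary logistic-regression model, a hypothetical trigger that sets one extra input feature to 1, a known target label, and an L2-bounded additive perturbation found by projected gradient descent in place of the paper’s trigger-inversion procedure. The server-side aggregation step is omitted, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def make_clean(n):
    """Toy clean data. Columns: [f0, f1, trigger_slot, bias]; trigger_slot is 0 on clean data."""
    X = np.zeros((n, 4))
    X[:, :2] = rng.normal(size=(n, 2))
    X[:, 3] = 1.0
    y = (X[:, 0] + X[:, 1] > 0).astype(float)
    return X, y

def stamp_trigger(X):
    """Apply the hypothetical attacker's trigger: set the trigger slot to 1."""
    Xt = X.copy()
    Xt[:, 2] = 1.0
    return Xt

def attack_success_rate(w, X, y):
    """Fraction of true class-0 inputs that the trigger flips to target class 1."""
    return float(np.mean(sigmoid(stamp_trigger(X[y == 0]) @ w) > 0.5))

def accuracy(w, X, y):
    return float(np.mean((sigmoid(X @ w) > 0.5) == (y == 1)))

def invert_trigger(w, X, y, eps=1.0, lr=0.1, steps=200):
    """Projected gradient descent for a perturbation delta (L2 norm <= eps) that
    pushes class-0 inputs toward target class 1. The bias column is masked out."""
    mask = np.array([1.0, 1.0, 1.0, 0.0])
    X0 = X[y == 0]
    delta = np.zeros(4)
    for _ in range(steps):
        p = sigmoid((X0 + delta) @ w)
        grad = np.mean(p - 1.0) * w * mask  # d/d(delta) of mean cross-entropy toward label 1
        delta -= lr * grad
        norm = np.linalg.norm(delta)
        if norm > eps:
            delta *= eps / norm             # project back onto the L2 ball
    return delta

def harden(w, X, y, delta, lr=0.5, steps=300):
    """Benign-client hardening: train on clean data plus perturbed copies that
    keep their TRUE labels, so the model unlearns the trigger direction."""
    Xa = np.vstack([X, X + delta])
    ya = np.concatenate([y, y])
    w = w.copy()
    for _ in range(steps):
        p = sigmoid(Xa @ w)
        w -= lr * Xa.T @ (p - ya) / len(ya)
    return w

# Hypothetical backdoored global model: a large weight on the trigger slot.
w_global = np.array([2.0, 2.0, 8.0, 0.0])
X, y = make_clean(1000)

delta = invert_trigger(w_global, X, y)
w_hardened = harden(w_global, X, y, delta)

print("ASR before:", attack_success_rate(w_global, X, y))
print("ASR after: ", attack_success_rate(w_hardened, X, y))
print("clean accuracy after:", accuracy(w_hardened, X, y))
```

On this toy data the hand-built backdoored model flips nearly all triggered class-0 inputs, while the hardened copy should flip very few and keep high clean accuracy. Plain training on clean data alone would leave the trigger weight untouched here, because the trigger slot is always 0 in clean inputs; it is the perturbed copies with their true labels that push that weight down. For a linear model the inverted perturbation simply points along the weight vector, so most of it lands on the trigger slot.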

On top of the framework, the researchers provide a theoretical analysis of how training on a benign client affects a malicious local client as well as the global model, a question that had not previously been studied in the literature. The analysis shows that their method guarantees a deterministic increase in loss on backdoor samples with only slight loss variation on clean samples. The researchers also conduct comprehensive experiments across different datasets and attack settings, showing the empirical superiority of their method over eight competing state-of-the-art (SOTA) defense techniques.

FLIP: A Provable Defense Framework for Backdoor Mitigation in Federated Learning

Kaiyuan Zhang¹, Guanhong Tao¹, Qiuling Xu¹, Siyuan Cheng¹, Shengwei An¹, Yingqi Liu¹, Shiwei Feng¹, Pin-Yu Chen², Shiqing Ma³, Xiangyu Zhang¹

¹Purdue University, ²IBM Research, ³Rutgers University

Abstract

Federated Learning (FL) is a distributed learning paradigm that enables different parties to train a model together for better quality and strong privacy protection. In this scenario, individual participants may get compromised and perform backdoor attacks by poisoning the data (or gradients). Existing work on robust aggregation and certified FL robustness does not study how hardening benign clients can affect the global model (and the malicious clients). In this work, we theoretically analyze the connection among cross-entropy loss, attack success rate, and clean accuracy in this setting. Moreover, we propose a trigger reverse-engineering-based defense and show that our method can provide a guaranteed robustness increase (i.e., lower the attack success rate) without affecting benign accuracy. We conduct comprehensive experiments across different datasets and attack settings. Our results on eight competing SOTA defenses show the empirical superiority of our method on both single-shot and continuous FL backdoor attacks.

About the Department of Computer Science at Purdue University

Founded in 1962, the Department of Computer Science was created to be an innovative base of knowledge in the emerging field of computing as the first degree-awarding program in the United States. The department continues to advance the computer science industry through research. U.S. News & World Report ranks Purdue CS #20 and #16 overall in graduate and undergraduate programs, respectively; seventh in cybersecurity; 10th in software engineering; 13th in programming languages, data analytics, and computer systems; and 19th in artificial intelligence. Graduates of the program are able to solve complex and challenging problems in many fields. Our consistent success in an ever-changing landscape is reflected in record undergraduate enrollment, increased faculty hiring, innovative research projects, and the creation of new academic programs. The increasing centrality of computer science in academic disciplines and society, and new research activities centered around data science, artificial intelligence, programming languages, theoretical computer science, machine learning, and cybersecurity, are the future focus of the department. cs.purdue.edu

Writer: Emily Kinsell, emily@purdue.edu

Sources: Kaiyuan Zhang, zhan4057@purdue.edu

Guanhong Tao, taog@purdue.edu