Fake News: Thoughts from Dan Goldwasser

11-27-2017

Writer(s): Dan Goldwasser, Assistant Professor of Computer Science

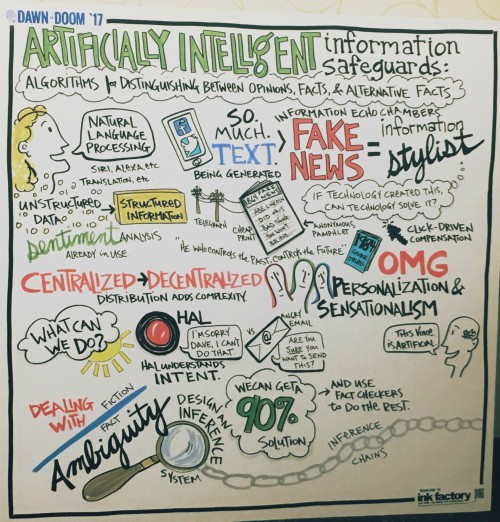

The term “fake news,” which became popular during the 2016 presidential election, refers to the propagation of false, or at least highly biased, information on social media. During the election, fake news reached a remarkably wide audience, eliciting reactions on social media from both sides of the political spectrum.

Instead of calling it fake news, perhaps the term “information styling” is more appropriate. Similarly, the authors of fake news stories can be referred to as “information stylists,” as they create stories that – although false – are carefully designed around our preconceived notions to help garner support for their stance. Fake news isn’t random – there is an invisible hand that carefully shapes and thinks about this content.

Fake news isn’t new

While the term “fake news” and its wide dissemination through social media are recent, the phenomenon of spreading false information to influence political outcomes is not. In fact, it can be traced back at least to the heated presidential race of 1864, during Lincoln’s reelection campaign. Just as the Internet has helped fake news spread in recent years, the technological advances of that time (such as cheap mass printing and the electric telegraph) played a role in spreading the fake news of 1864.

While fake news isn’t a new concept, today’s technology has exacerbated the problem. Journalism has changed dramatically, both in the way information is distributed and in the way we consume it. Twenty years ago, we relied on a few central sources of information to provide verified content, which was distributed on physical paper. Since then, we have moved first to consuming news from many news websites and then to consuming it on social websites, where each user can potentially act as a distribution channel. Today, news is just one part of our experience on social media.

Social media is an echo chamber

Unlike traditional newspapers and even news websites, social networks do not passively display information. Instead, the information presented to us is contextualized by the social information collected about us. The content is personalized using algorithms designed to identify what kind of information each individual will like and engage with. It is reactive to our social environment, resulting in an echo chamber in which our own biases are repeatedly confirmed. In addition, social media websites are designed to increase user engagement rather than to present a diversity of opinion. The content displayed to us is chosen for its potential to evoke reactions from users and to encourage them to share it with their friends.

We aren’t as smart as we used to be – or at least we don’t think as critically

While it’s clear that these websites adapt to us, we also adapt to them. Over time, the fact that content is optimized for engagement influences how we read. To deal with the overwhelming amount of information, we come to prefer simplified content, which releases us from the need to think critically about facts, figures and the long arguments derived from them.

Can AI solve the problem?

To a large extent, recent AI advances led to the decline of the old information safeguards, which naturally raises the question of whether we can build artificially intelligent information safeguards to help us navigate around fake news.

Machine learning, which is responsible for many of the recent success stories in AI, is a promising direction. A machine learning algorithm learns to automatically identify patterns in data and categorize instances based on these patterns. For example, given a collection of product reviews, it will learn to categorize the reviews into positive and negative reviews using relevant patterns like words and short phrases that indicate customer satisfaction.
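To make the product review example concrete, here is a minimal sketch of such a classifier in Python. It is an illustration only: the six training reviews are made up, and the method (a small Naive Bayes word-count model with add-one smoothing) is just one common way to learn from word patterns, chosen here for brevity. The names `TRAIN`, `train` and `classify` are hypothetical.

```python
import math
from collections import Counter

# Hypothetical, hand-written training reviews (illustration only).
TRAIN = [
    ("great product works perfectly", "positive"),
    ("very happy with this purchase", "positive"),
    ("excellent quality highly recommend", "positive"),
    ("terrible product broke after a day", "negative"),
    ("very disappointed waste of money", "negative"),
    ("poor quality do not recommend", "negative"),
]


def train(examples):
    """Count how often each word appears in each category."""
    word_counts = {}          # label -> Counter of words
    label_counts = Counter()  # label -> number of reviews
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the category whose word patterns best match the text."""
    word_counts, label_counts, vocab = model
    total_reviews = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_reviews in label_counts.items():
        # Start from how common the category is, then add word evidence.
        score = math.log(n_reviews / total_reviews)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing keeps unseen word/label pairs from zeroing out.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAIN)
print(classify("great purchase very happy", model))
print(classify("terrible waste of money", model))
```

The model knows nothing beyond which words tended to appear in which category, which is exactly the kind of simple pattern that works for reviews.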

But recognizing fake news is highly challenging and can’t be captured by simple patterns, as news continuously changes. Distinguishing between false and real information requires a deeper understanding of the world and the biases of potential readers. This is not something that can be easily represented in the data, but there are several promising directions.

First, we should stop defining the problem as a purely technological one, and construct models that support human decision-making processes rather than replace them. Second, we should find new data abstractions that capture the connections between textual and social information. Finally, and most importantly, we, as users of those platforms, should take an active role – not just sharing information with our friends, but also thinking critically about it and recognizing the risks and our responsibilities in this new era.