Fairness in Machine Learning: Purdue joins $1M NSF fairness in AI program

03-04-2021

It’s important to remember that fairness in artificial intelligence (AI) starts and ends with people. Researchers across the industry have worked to address the immediate harms of AI bias, often by proposing methods that enforce fairness constraints to make an AI system more equitable in a one-shot setting. Less is known about the longer-term consequences of AI bias, such as its impact on people who are caught up in many rounds of AI decision making. Because the effects of this long-term loop are largely unknown, a new research project will study how people's accumulated utility and welfare evolve over many rounds of interaction with AI. What can we do to reduce the impact of biases, and how can we understand their long-term effects?

To find out, Purdue’s Department of Computer Science has joined a collaborative $1M award from the National Science Foundation (NSF) Program on Fairness in Artificial Intelligence. Supported by funding from NSF and Amazon, the project explores fairness in automated machine learning and artificial intelligence.

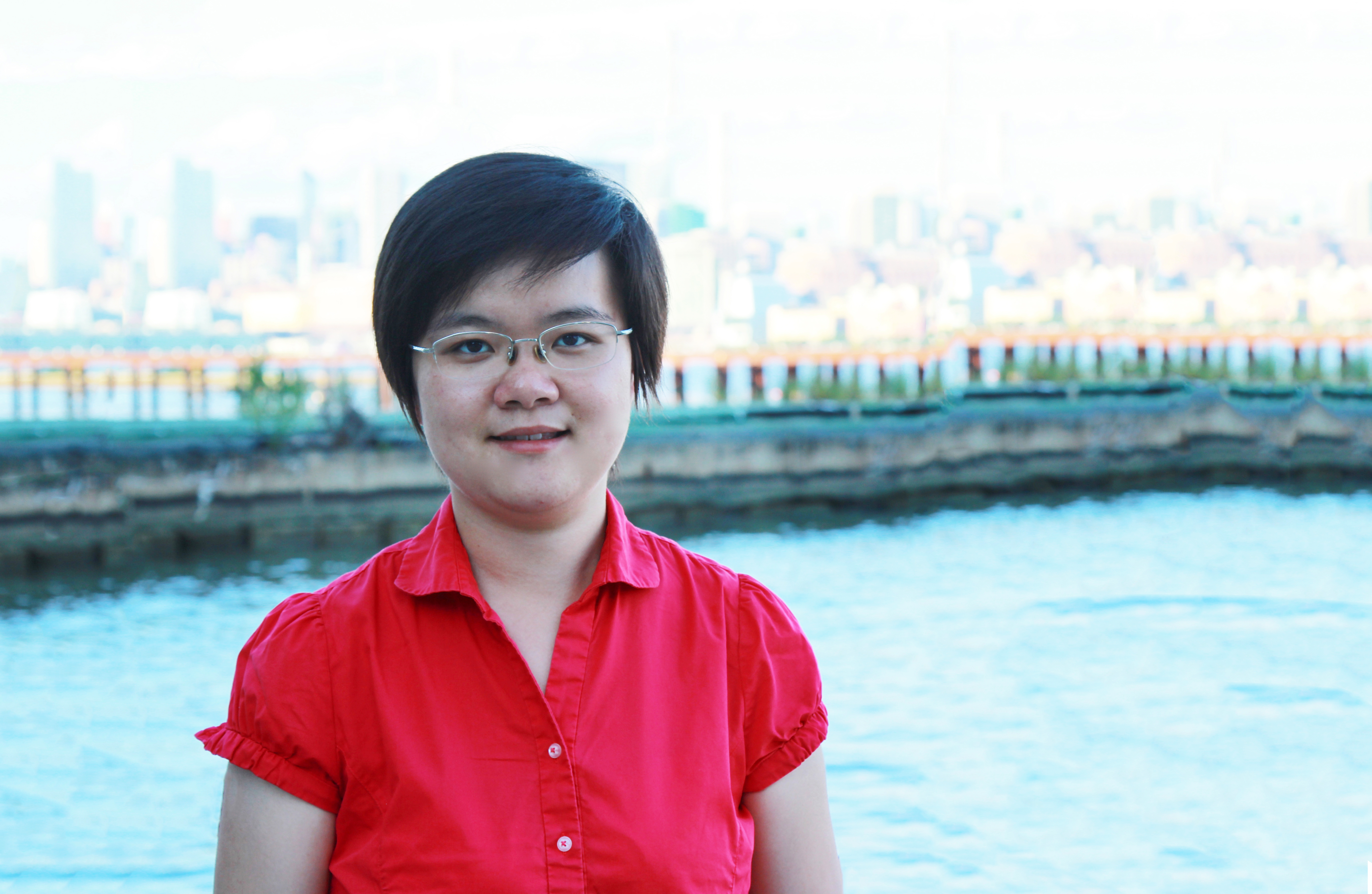

The new project, co-led by AI researcher Professor Ming Yin and her team, received funding under the title “Fairness in Machine Learning with Human in the Loop.” Its goal is to identify and mitigate bias in AI and machine learning systems to achieve long-lasting equitable outcomes.

Yin said, “Many research efforts have been carried out to address this challenge by enforcing fairness constraints and creating a fairer AI in a one-shot setting.” She added, “Much less attention has been paid to understanding fairness in AI from a long-term point of view, considering the feedback loop from humans as AI makes decisions repeatedly over time.”

The project aims to understand the long-term impact of fair algorithmic decision making and to use that understanding to design ways to promote long-term equitable outcomes.

One question the research will tackle is how people from different subgroups react to AI's fair or unfair decisions. Another is how those reactions, in turn, affect the data AI has access to, the fairness of its decisions, and people's welfare in the long run.

Yin is co-leading the project, “Fairness in Machine Learning with Human in the Loop,” funded through the NSF Fairness in AI (FAI) program, alongside Professor Yang Liu of the University of California, Santa Cruz (lead), Professor Mingyan Liu of the University of Michigan, and Professor Parinaz Naghizadeh of Ohio State University.

Abstract

Despite early successes and significant potential, algorithmic decision-making systems often inherit and encode biases that exist in the training data and/or the training process. It is thus important to understand the consequences of deploying and using machine learning models and to provide algorithmic treatments that ensure such techniques ultimately serve the social good. While recent works have examined fairness issues in AI through short-term measures, the long-term consequences and impacts of automated decision making remain unclear. Understanding the long-term impact of a fair decision gives policy-makers guidance when deploying an algorithmic model in a dynamic environment and is critical to the model's trustworthiness and adoption. It will also drive the design of algorithms with an eye toward the welfare of both the makers and the users of these algorithms, with the ultimate goal of achieving more equitable outcomes.

This project aims to understand the long-term impact of fair decisions made by automated machine learning algorithms by establishing an analytical, algorithmic, and experimental framework that captures the sequential learning and decision process, the actions and dynamics of the underlying user population, and that population's welfare. This knowledge will help design the right fairness criteria and intervention mechanisms throughout the life cycle of the decision-action loop to ensure long-term equitable outcomes. Central to this project’s intellectual inquiry is its focus on the human in the loop, i.e., an AI-human feedback loop in which automated decision-making involves human participation. Our focus on the long-term impacts of fair algorithmic decision-making, while explicitly modeling and incorporating human agents in the loop, provides a theoretically rigorous framework for understanding how an algorithmic decision-maker fares in the foreseeable future.
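To make the idea of a decision-action loop more concrete, here is a minimal toy sketch; it is not the project's framework. It assumes two hypothetical groups, A and B, a decision-maker that sets a per-group acceptance rate each round, a perfect classifier, and a made-up rule for how each group's share of qualified people responds to those decisions. The policy names, the `gain` and `loss` parameters, and the response rule are all illustrative assumptions.

```python
"""Toy simulation of a repeated decision-action feedback loop.

Every quantity here is hypothetical and chosen only for illustration.
"""
from typing import Callable, Dict, List, Tuple

Policy = Callable[[Dict[str, float]], Dict[str, float]]


def unconstrained(qualified: Dict[str, float]) -> Dict[str, float]:
    # Accept exactly each group's qualified share (assumes a perfect classifier).
    return dict(qualified)


def demographic_parity(qualified: Dict[str, float]) -> Dict[str, float]:
    # One-shot fairness constraint: the same acceptance rate for every group,
    # set here (hypothetically) to the mean qualified share.
    rate = sum(qualified.values()) / len(qualified)
    return {g: rate for g in qualified}


def step(q: float, accept_rate: float, gain: float, loss: float) -> Tuple[float, float]:
    """One round for one group; returns (new qualified share, round welfare)."""
    tp = min(accept_rate, q)          # qualified people who were accepted
    fp = max(0.0, accept_rate - q)    # unqualified people who were accepted
    # Hypothetical human response: successful acceptances raise the qualified
    # share, failed acceptances lower it; the result is clipped to [0, 1].
    new_q = min(1.0, max(0.0, q + gain * tp - loss * fp))
    return new_q, tp - fp


def simulate(policy: Policy, qualified: Dict[str, float], rounds: int,
             gain: float = 0.1, loss: float = 0.2):
    """Run the loop; returns (per-round qualified shares, cumulative welfare)."""
    q = dict(qualified)
    welfare = {g: 0.0 for g in q}
    history: List[Dict[str, float]] = [dict(q)]
    for _ in range(rounds):
        rates = policy(q)  # the decision-maker reacts to the current population
        for g in q:
            q[g], w = step(q[g], rates[g], gain, loss)
            welfare[g] += w
        history.append(dict(q))
    return history, welfare


if __name__ == "__main__":
    start = {"A": 0.8, "B": 0.2}
    for name, policy in [("unconstrained", unconstrained),
                         ("demographic parity", demographic_parity)]:
        history, welfare = simulate(policy, start, rounds=10)
        print(f"{name}: final qualified share {history[-1]}, "
              f"cumulative welfare {welfare}")
```

With these made-up parameters, the parity policy lowers group B's qualified share round after round, while the unconstrained policy raises it, because accepting unqualified applicants is modeled as harmful. A different response rule could reverse that comparison, which is why the way people react to decisions has to be modeled explicitly in any long-term analysis.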