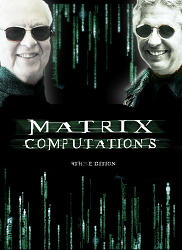

Matrix Computations

David Gleich

Purdue University

Fall 2023

Course number CS-51500

Tuesday-Thursday 1:30-2:45pm. Hampton 2123

Common matrix structures

We now illuminate some of the relationships between matrix computations and linear algebra.

Why is this stuff important? The important bit is the concept of the rank of a matrix. This gives the dimension of the vector-space associated with the matrix. So it's worth reviewing up to the point of rank.

Sets of vectors

Linearly independent A set of vectors in is called linearly independent if imples all .

Examples The vectors and are linearly independent. This can be verified by showing that the system of equations: only has the solution . However, the vectors and are not linearly independent because .

As a matrix The property of being linearly independent is easy to state as a matrix. Suppose that is an matrix where is the th column: Then the set of vectors is linearly independent if implies that .

Span (not spam) The span of a set of vectors is the set of all linear combinations.

Subspaces

Defining a vector spaces is best left to Wikipedia:

Suffice it to say that that the set is a vector-space with the field of real-numbers as scalars.

A subset is called a subspace if it also satisfies the properties of being a vector-space itself.

Example Let for some vector . Then is a subspace of .

Spans and subspaces The example we just saw shows that , the span of a single vector, is a subspace. This is true in general: is a subspace.

Linearly independent spans Let be linearly independent. Then for , there exists a unique set of 's such that . As a matrix, this is saying that the system of equations: has a unique solution where

Subspaces to bases and dimensions For any subspace , we can find always find a set of linearly independent vectors such that . We call any such set a basis for the subspace .

IMPORTANT Any basis for a subspace always has the same number of vectors. Thus, the number of vectors in a subspace is a unique property of a vector space and is the dimension of the vector-space.

This ends our discussion of subspaces. Now we'll see how we can use subspaces to discuss matrices

Matrices to subspaces

Given a matrix .

Range The range of a matrix is the subspace: Note that So the range is just one particular span of a set of vectors.

Rank

Perhaps the most important thing in these notes is the concept of rank. At this point, rank is simple.

That is, the rank of is the dimension of the subspace given by the range of . This property is fundamentally important.

For instance, if with and , then we know that has a set of linearly independent column vectors!

Example Here's where we can use some of our matrix algebra to prove a statement. Let be an permutation matrix. Show that .

Proof Sketch A permutation matrix just reorders the columns of the matrix. This won't change anything in the range of . So the set of vectors in the range of won't change. Thus, the dimension of that vector space won't change.

Key question How do we compute rank?

Answer Use a matrix decomposition!

Useful matrix decompositions

Let be a matrix. The following are matrix decompositions exist for any matrix:

-

where is and orthogonal, and is and upper-triangular.

-

where is and orthogonal, is and orthogonal, and is and diagonal, with diagonal entries . (That is, sorted in decreasing order and non-negative.)

-

where and are permutation matrices and and are lower and upper triangular.

These decompositions expose the rank of a matrix in various ways. For instance, the number of entries on the diagonal of that are non-zero is equal to the rank of the matrix.