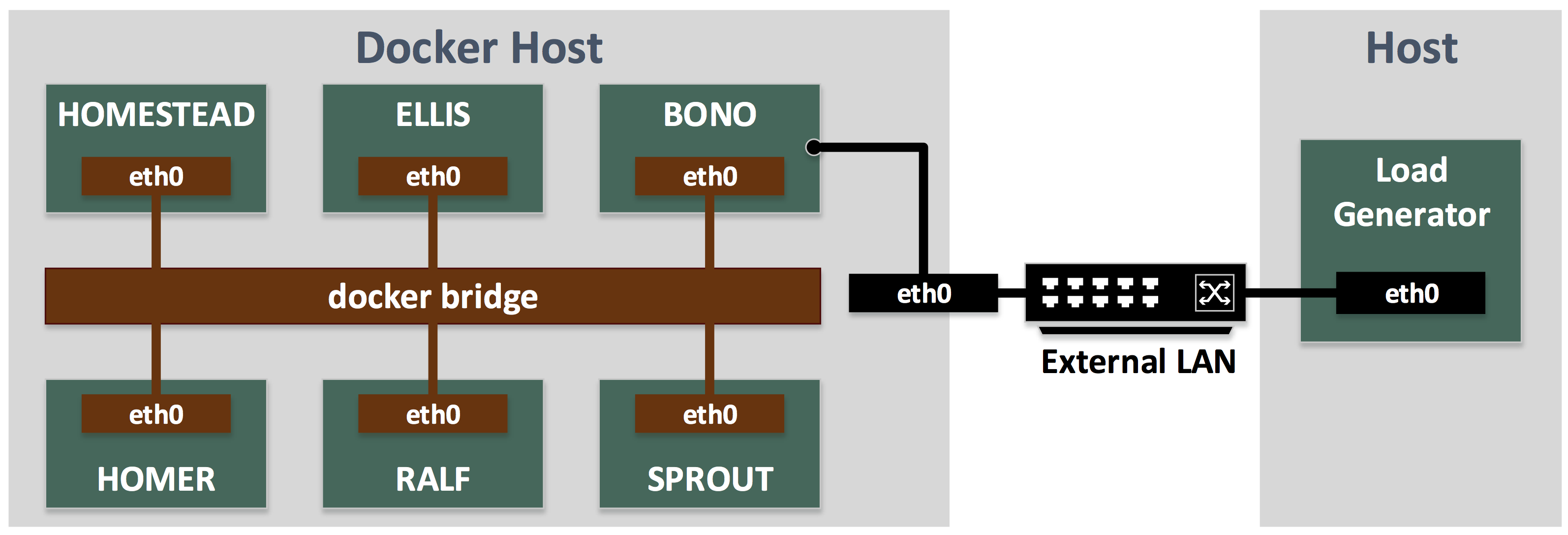

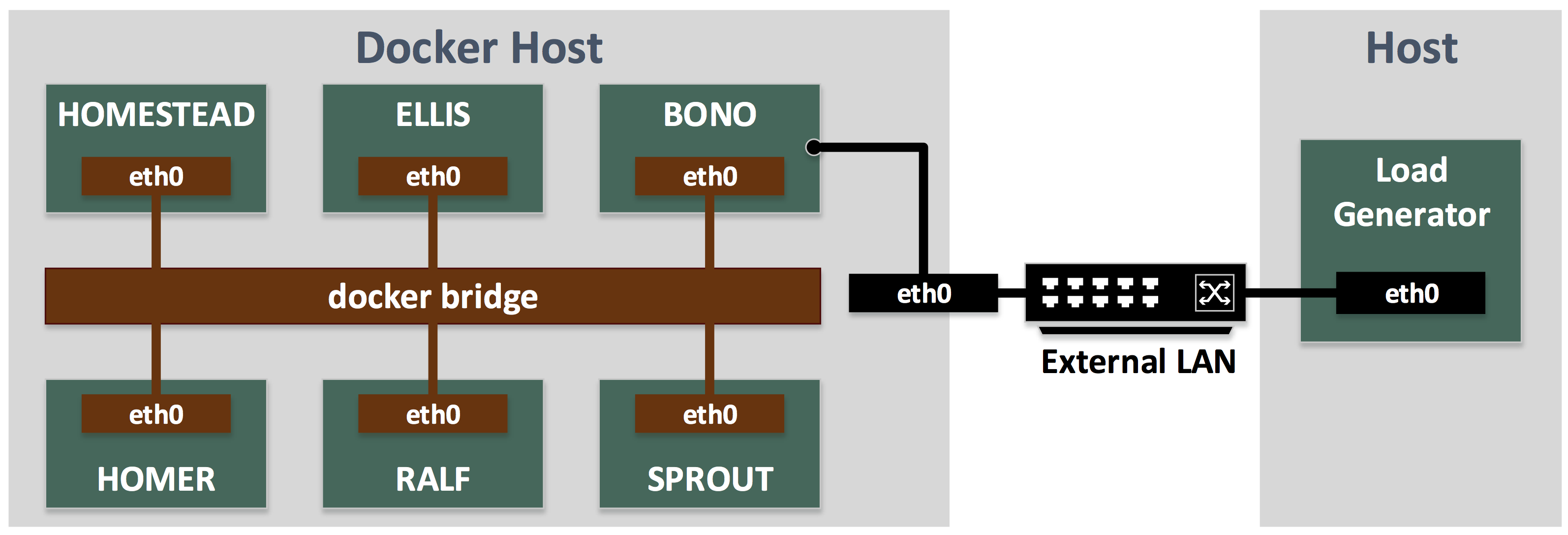

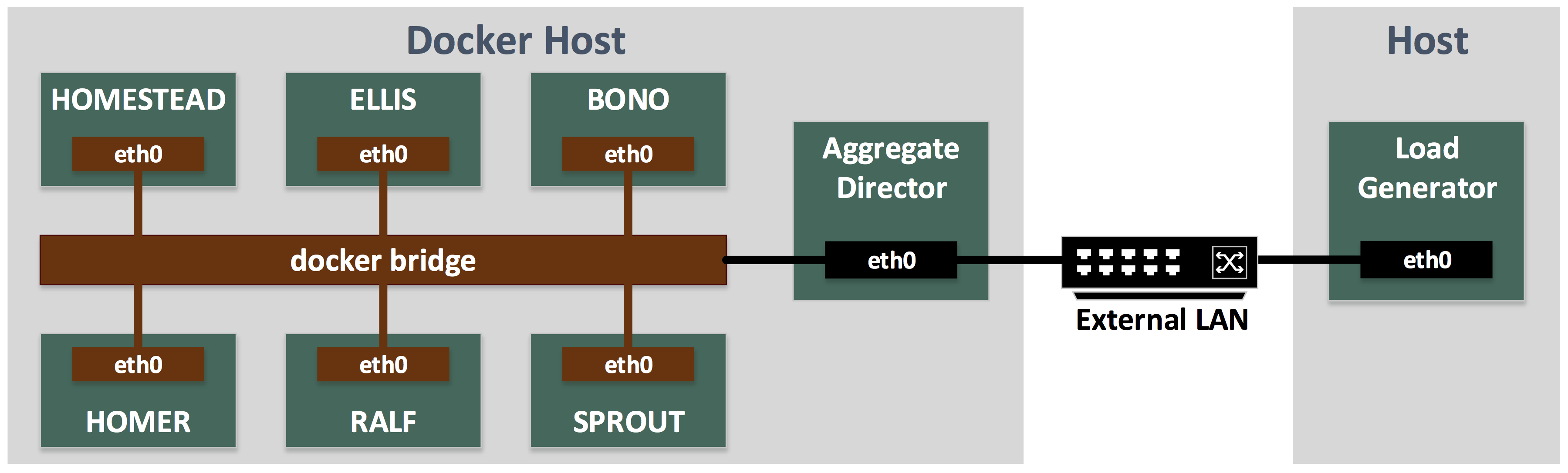

Fig. 1: Clearwater Deployment on testbed

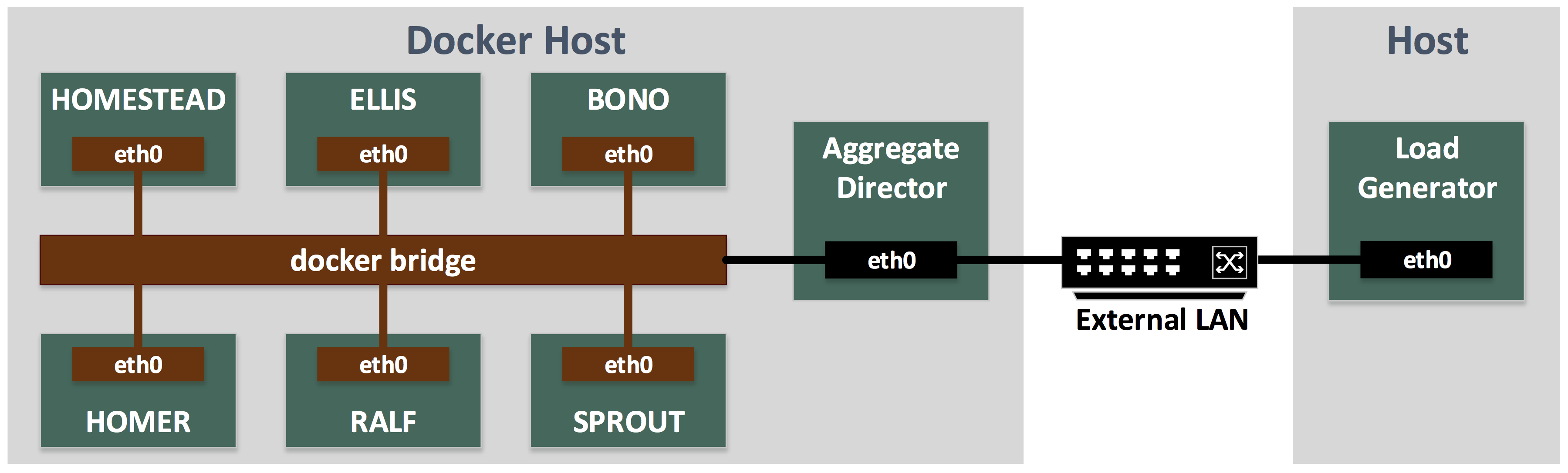

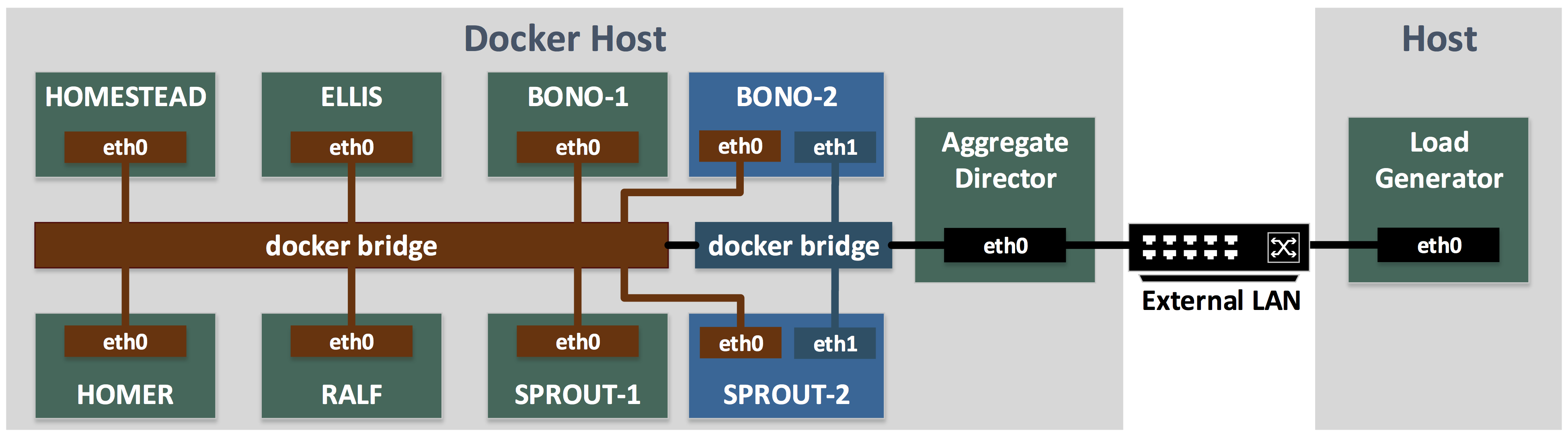

Fig. 2: REGISTER AA on testbed

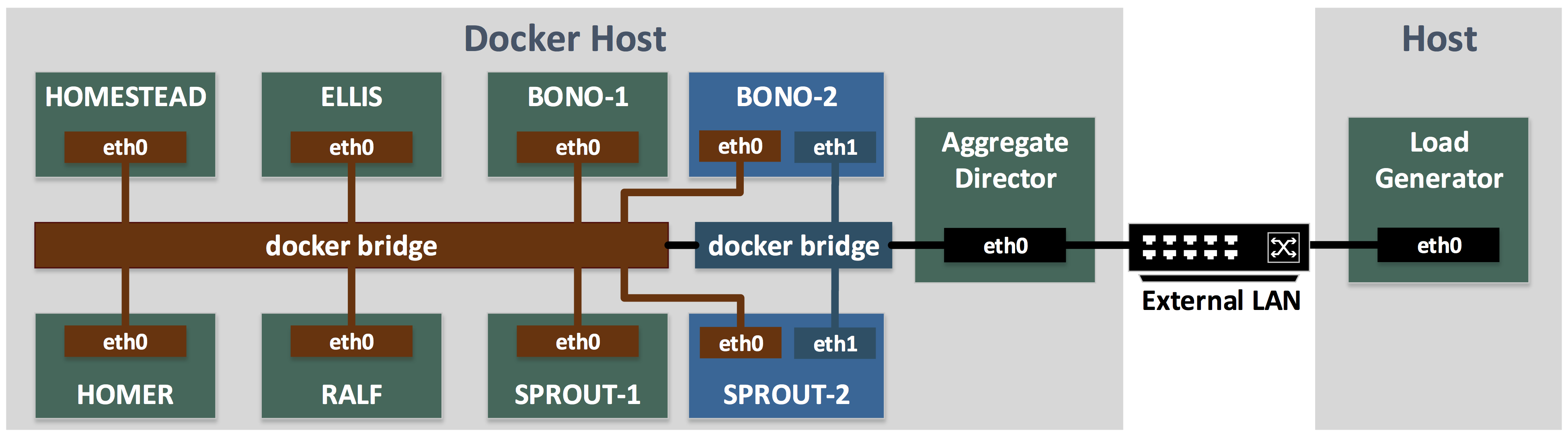

Fig. 3: SUBSCRIBE AA on testbed

Network Functions Virtualization (NFV) has enabled operators to dynamically place and allocate resources for network services to match workload requirements. However, unbounded end-to-end (e2e) latency of Service Function Chains (SFCs) resulting from distributed Virtualized Network Function (VNF) deployments can severely degrade performance. In particular, SFC instantiations with inter-data center links can incur high e2e latencies and Service Level Agreement (SLA) violations. These latencies can trigger timeouts and protocol errors with latency-sensitive operations.

Traditional solutions to reduce e2e latency involve physically deploying service elements in close proximity. However, these solutions are no longer viable in the NFV era. In this paper, we present a solution that bounds the e2e latency of SFCs and of inter-VNF control message exchanges by creating micro-service aggregates based on the affinity between VNFs. Our system, Contain-ed, dynamically creates and manages affinity aggregates using lightweight virtualization technologies such as containers, so that they can be placed in close proximity, thereby bounding the e2e latency. We have applied Contain-ed to the Clearwater IP Multimedia Subsystem and built a proof of concept. Our results demonstrate that, by utilizing application- and protocol-specific knowledge, affinity aggregates can effectively bound SFC delays and significantly reduce protocol errors and service disruptions.


| Component | Functionality | Software |
| --- | --- | --- |
| Load Generator | Generate SIP traffic | SIPp |
| Aggregate Director | Distribute incoming traffic to Affinity Aggregates | OpenSIPS |
| Affinity Aggregate | Handle incoming messages (REGISTER/SUBSCRIBE) | Clearwater |

There are three main components in the testbed:

- **Load Generator:** SIPp is used as the load generator to produce REGISTER and SUBSCRIBE traffic. SIPp can generate customized SIP traffic from an XML scenario file; please refer to the SIPp documentation for details. The scripts used to generate the REGISTER workload for our experiments are available here: load-generator scripts.
- **Aggregate Director:** We use the OpenSIPS dispatcher module to provide the functionality of the Aggregate Director. Please refer to the OpenSIPS documentation for instructions on installing and configuring the dispatcher module. OpenSIPS must be configured so that REGISTER and SUBSCRIBE messages are routed to the appropriate Affinity Aggregates. A sample of the OpenSIPS config file used in our experiments is available here: dispatcher config file.
- **Affinity Aggregate:** For our experiments, we use the containers provided by Clearwater. Please refer to the Clearwater documentation for detailed instructions on how to deploy Clearwater. The configuration files used for deploying Clearwater and the scripts used for creating the Contain-ed deployment are available here: contain-ed server scripts.
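To make the Aggregate Director's role more concrete, the routing decision can be sketched in a few lines of Python. This is only an illustration of the idea, not the OpenSIPS dispatcher configuration used in the experiments: the aggregate addresses, the `AGGREGATES` table, and the helper names are hypothetical, and hashing on the Call-ID header is an assumption chosen so that messages belonging to the same exchange land on the same aggregate.

```python
import zlib

# Hypothetical destinations, one pool per message type. The real
# destinations are defined in the OpenSIPS dispatcher config file.
AGGREGATES = {
    "REGISTER": ["10.0.0.11:5060", "10.0.0.12:5060"],
    "SUBSCRIBE": ["10.0.0.21:5060"],
}


def sip_method(message: str) -> str:
    """Return the method from the SIP request line (first token)."""
    request_line = message.split("\r\n", 1)[0]
    return request_line.split(" ", 1)[0].upper()


def call_id(message: str) -> str:
    """Return the Call-ID header value, or '' if the header is absent."""
    for line in message.split("\r\n")[1:]:
        if line == "":  # blank line marks the end of the headers
            break
        name, _, value = line.partition(":")
        if name.strip().lower() in ("call-id", "i"):  # 'i' is the compact form
            return value.strip()
    return ""


def pick_aggregate(message: str) -> str:
    """Choose the Affinity Aggregate that should handle this message."""
    method = sip_method(message)
    targets = AGGREGATES.get(method)
    if not targets:
        raise ValueError(f"no Affinity Aggregate configured for {method}")
    # Assumption: a stable hash of the Call-ID keeps related messages
    # on the same aggregate within a pool.
    index = zlib.crc32(call_id(message).encode("utf-8")) % len(targets)
    return targets[index]
```

A REGISTER message is therefore always sent to one of the REGISTER aggregates and a SUBSCRIBE message to a SUBSCRIBE aggregate, while any other method is rejected by this sketch rather than forwarded.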