Computational Methods in Optimization

David Gleich

Purdue University

Spring 2023

Course number CS-52000

Monday, Wednesday, Friday, 1:30-2:20pm

Location Lawson 1106

Course project

The course project is worth 30% of your final grade.

It will involve a statement of choice on

- March 27th extended to March 30th based on original typo.

and then weekly reports on

- April 3rd

- April 10th

- April 17th

The final project is due May 1st.

These three reports are subject to the same late policy as homework (i.e., no late points until Monday at 5pm, then same policy). Except that now points are deducted from your project grade.

The statement of choice due on March 27th is worth a smaller number of points but will result in a zero on the entire final project if you don't turn it in on time.

The weekly status reports should be 2-3 pages long and discuss the work you have done that week on the project. They are equally weighted and worth 1/3rd of the project grade in total (i.e. 10% of your final grade). This makes them worth about "one homework" homework.

The final project should be 15-20 pages, single spaced, in a readable typeset manuscript. It should describe what you did, why you did it, and what your results mean or say about your ideas. I like to think of a course report like this as equivalent to "1/3rd" of a research paper. The difference is that you should describe everything you did in the course project, whereas you'd only describe the successful ideas in a paper.

You may collaborate with one, and only one,

other person on this project and submit a joint

writeup. You must identify this collaborator in

your selection of the project on March 27.

You may not share code, ideas,

or discuss with any other project group outside

of your partner. (Note that collaboration is

encouraged -- there is no difference between

grading collaborative projects and individual

projects.)

There are three choices for the project.

Project 1: Optimization on the surface of the (hyper)-sphere

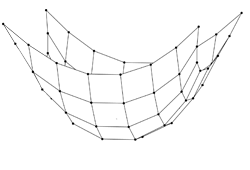

A classic problem in physics is: how do charged particles distributed themselves on the surface of the sphere? The case for a 2-sphere -- a circle -- is simple, the particles appear at equally spaced points. However, for the 3-sphere (the standard sphere), there is no "closed form" solution, and indeed, little to go on besides a few well understood special cases. Finding the global minimum is a particularly tough problem known as the Thompson problem. While the Thompson problem uses the energy term: you should look at the "easier" energy: as well as study solutions on higher dimensional spheres. Consequently, the optimization problem is:

Dimensions: is the dimension of the sphere (k = 3, is the standard sphere. is the number of points. Consequently, there are variables.

In this project, you will study the Thompson problem, as well as the question of: how does a graph embed on a sphere of hypersphere. This problem occurs in a reformulation of the celebrated Goemanns-Williamson SDP-based MAXCUT approximation algorithm as a low-rank SDP. The low-rank SDP is really just an optimization problem on the surface of the sphere. See fixing two weakenesses of the spectral method for an example of why this is interesting. Also seek some notes that I wrote out this http://www.cs.purdue.edu/homes/dgleich/publications/Gleich%202006%20-%20wom.pdf (Section 4.3).

For these problems, we may also need the additional constraint that at least some of the points are distinct. This usually corresponds to a constraint such as .

Both of these problems have the following type of objective function: Each represents the coordinates of the h charge or node in the graph. As a shorthand, we can place all of these variables into a matrix as follows: Using this shorthand, note that the objective and constraint are now:

Possible project approaches:

1) Focus on large scale objectives

2) Focus on highly accurate solutions

3) Focus on convex reformulations

4) Focus on solving a sequence of relaxed constraints

5) Focus on a large number of nodes/molecules

6) Focus on high dimensional problems, n ~ k, and k large.

7) Investigate optimization software on the full constrained problem.

8) Studying the local minima computed via different typed of structured initial conditions.

9) Trying to find the best points possible.

10) Formulate a homotopy method or a multi-resolution approach for the problem.

11) Study augmented Lagrangian methods.

Your goal is to say SOMETHING about the project. That is, you must go beyond just using an optimization software package to solve the objectives (although, that's "good enough" for a B.)

Project 2: An interior point LP solver

Throughout the class, we've stressed (when possible) some of the details that make real optimization software run. In this project, you'll be responsible for implemeting the most robust interior point LP solver you can.

You should read about interior point solvers in Primal-dual interior-point methods by Stephen J. Wright, SIAM 1997.

You must do this in Julia. (If you don't think this will be appropriate, let me know and we can discuss alternatives, but you must do so by April 10th.)

The interface I will evaluate is:

using MatrixDepot, SparseArrays

mutable struct IplpSolution

x::Vector{Float64} # the solution vector

flag::Bool # a true/false flag indicating convergence or not

cs::Vector{Float64} # the objective vector in standard form

As::SparseMatrixCSC{Float64} # the constraint matrix in standard form

bs::Vector{Float64} # the right hand side (b) in standard form

xs::Vector{Float64} # the solution in standard form

lam::Vector{Float64} # the solution lambda in standard form

s::Vector{Float64} # the solution s in standard form

end

mutable struct IplpProblem

c::Vector{Float64}

A::SparseMatrixCSC{Float64}

b::Vector{Float64}

lo::Vector{Float64}

hi::Vector{Float64}

end

function convert_matrixdepot(P::MatrixDepot.MatrixDescriptor)

# key_base = sort(collect(keys(mmmeta)))[1]

return IplpProblem(

vec(P.c), P.A, vec(P.b), vec(P.lo), vec(P.hi))

end

"""

soln = iplp(Problem,tol) solves the linear program:

minimize c'*x where Ax = b and lo <= x <= hi

where the variables are stored in the following struct:

Problem.A

Problem.c

Problem.b

Problem.lo

Problem.hi

and the IplpSolution contains fields

[x,flag,cs,As,bs,xs,lam,s]

which are interpreted as

a flag indicating whether or not the

solution succeeded (flag = true => success and flag = false => failure),

along with the solution for the problem converted to standard form (xs):

minimize cs'*xs where As*xs = bs and 0 <= xs

and the associated Lagrange multipliers (lam, s).

This solves the problem up to

the duality measure (xs'*s)/n <= tol and the normalized residual

norm([As'*lam + s - cs; As*xs - bs; xs.*s])/norm([bs;cs]) <= tol

and fails if this takes more than maxit iterations.

"""

function iplp(Problem, tol; maxit=100)

end

There is less flexibility here, but two focuses are:

1) Focus on robustness (solve all the problems)

2) Focus on speed (don't solve everything, but be very fast when you do solve it.)

The test problems all come from the University of Flordia Sparse Matrix repository: https://sparse.tamu.edu/LPnetlib

- 'afiro','brandy','fit1d','adlittle','agg','ganges','stocfor1', '25fv47', 'chemcom'

These matrices can be downloaded using the MatrixDepot.jl package

Specific requirements

Your code must be written in the spirit of reproducible research.

The final evaluation will take into account my ability to download

and run your code. Also, I will evaluate it on additional

problems from the LPnetlib collection and compare the solutions

against the Clp simplex code.

Project X: Your own research

These projects are discouraged, but if there is a natural fit between your research and the objectives of the class, you may propose a project. In your proposal (to me), you must detail what you plan to do, how it compares with Project 1 and 2.

No collaboration will be allowed on projects on your own research.