The Art of Software Testing

Chapter 5: Module (Unit) Testing

Glen Myers

Wiley

Third Edition, 2012

In this chapter we consider an initial step in structuring the testing of a large program: module (unit) testing

Module testing (or unit testing) is the process of testing the individual classes, methods, functions, or procedures in a program

Testing is first focused on the smaller building blocks of the program

Unit testing eases the task of debugging (the process of pinpointing and correcting a discovered error), since, when an error is found, it is known to exist in a particular module

There may be an opportunity to test multiple modules simultaneously

The purpose of module testing is to compare the function of a module to the functional requirements that define the module

Test-Case Design

You need two types of information when designing test cases for a module test: the requirements of the module and the module's source code

Module testing is largely white-box oriented

As you test larger entities, such as entire programs, white-box testing becomes less feasible

The test-case design procedure for a module test is the following: analyze the module's logic using one or more of the white-box methods, and then supplement these test cases by applying black-box methods to the module's specification

The first step is to list the conditional decisions in the program

Be sure to use Multiple Condition Coverage, Equivalence Classes, Boundary Value Analysis, and Error Guessing
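The procedure can be sketched on a small module. The module `shipping_fee` and its fee rules are hypothetical, invented only for illustration. The test table applies multiple-condition coverage to the compound decision `express and weight_kg > 2`, boundary-value analysis to the weight limits, and error guessing to an invalid weight.

```python
# Hypothetical module under test; the fee rules are invented for illustration.
def shipping_fee(weight_kg: int, express: bool) -> float:
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    fee = 5.0 if weight_kg <= 10 else 5.0 + (weight_kg - 10) * 0.5
    if express and weight_kg > 2:          # compound decision
        fee *= 2
    return fee

# (weight_kg, express, expected fee); each comment names the technique behind the case.
CASES = [
    (1, False, 5.0),    # express=F, weight>2=F  (multiple-condition coverage)
    (2, True, 5.0),     # express=T, weight>2=F  (also the boundary of weight > 2)
    (3, False, 5.0),    # express=F, weight>2=T
    (3, True, 10.0),    # express=T, weight>2=T
    (10, False, 5.0),   # upper edge of the flat-rate class (boundary-value analysis)
    (11, False, 5.5),   # first weight in the surcharge class
    (11, True, 11.0),   # surcharge combined with express
]

def run_cases():
    """Return a list of failing cases; an empty list means every case passed."""
    failures = []
    for weight, express, expected in CASES:
        actual = shipping_fee(weight, express)
        if actual != expected:
            failures.append((weight, express, expected, actual))
    # Invalid equivalence class / error guessing: a zero weight must be rejected.
    try:
        shipping_fee(0, False)
        failures.append((0, False, "ValueError", "no exception"))
    except ValueError:
        pass
    return failures

print(run_cases())  # prints [] when every case passes
```

Stating the expected fee for every row up front is what lets the loop decide pass or fail mechanically.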

Incremental Testing

Should you test a program by testing each module independently and then by combining the modules to form the program (nonincremental or big-bang testing)?

Or should you combine the next module to be tested with the set of previously tested modules (incremental testing or integration testing)?

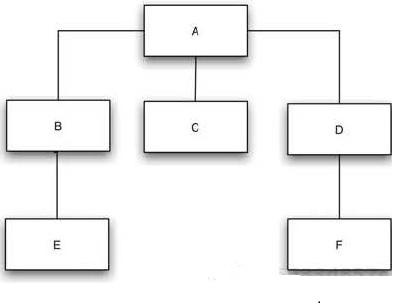

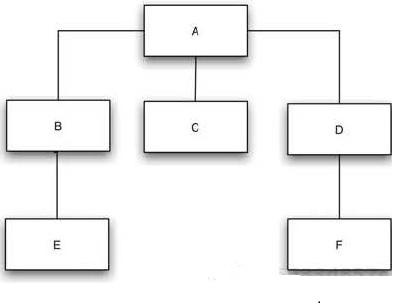

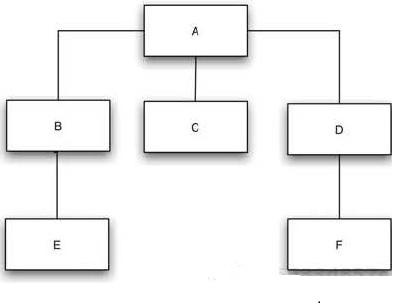

Figure 5.7 is an example

The lines connecting the modules represent the control hierarchy of the program: module A calls modules B, C, and D; module B calls module E; and module D calls module F

Nonincremental testing is performed in the following manner...

First, a module test is performed on each of the six modules, testing each module as a stand-alone entity

Modules might be tested at the same time or in succession

The testing of each module can require a special driver module and one or more stub modules

For example, testing module B requires a driver that stands in for its caller, A, and a stub that stands in for the module it calls, E
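A minimal sketch of that arrangement follows. The module names come from the figure, but the logic of B (a discount calculation) is hypothetical, and passing E in as a parameter is an assumption of this sketch that makes the stub easy to substitute.

```python
# Hypothetical module B: called by A, and itself calls E to look up a discount rate.
# E is passed in as a parameter so a test can substitute a stub (an assumption of
# this sketch; real code may wire its dependencies differently).
def module_b(order_total, lookup_discount):
    rate = lookup_discount(order_total)
    return round(order_total * (1 - rate), 2)

# Stub for E: a simplified stand-in that returns plausible discount rates.
def stub_e(order_total):
    return 0.10 if order_total >= 100 else 0.0

# Driver standing in for A: feeds test inputs to B and compares the results
# with the expected values.
def driver_for_b():
    cases = [(50.0, 50.0), (100.0, 90.0), (250.0, 225.0)]  # (input, expected)
    report = []
    for total, expected in cases:
        actual = module_b(total, stub_e)
        report.append((total, expected, actual, actual == expected))
    return report

for row in driver_for_b():
    print(row)
```

Neither the driver nor the stub is part of the delivered program; both exist only so that B can run on its own.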

When the module testing of all six modules has been completed, the modules are combined to form the program

An alternative approach is incremental testing

Rather than testing each module in isolation, the next module to be tested is first combined with the set of modules that have already been tested

Bottom Up:

Test modules E, C, and F, either in parallel (by three people) or serially

Next, test B and D; but rather than testing them in isolation, combine them with modules E and F, respectively

Test module A

Needs driver modules (no stubs)

Top Down:

Test module A

Test modules B, C, D

Test modules E, F

Needs stub modules (no drivers)

Nonincremental testing requires more work (more drivers and stubs)

Less work is required for incremental testing, because previously tested modules are used instead of the driver modules (if you start from the top) or stub modules (if you start from the bottom) needed in the nonincremental approach
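The difference in scaffolding can be counted directly. This sketch assumes the Figure 5.7 hierarchy implied by the examples above (A calls B, C, and D; B calls E; D calls F) and, like the comparison above, assumes the top module A needs no driver.

```python
# Call hierarchy assumed for Figure 5.7: A calls B, C, D; B calls E; D calls F.
CALLS = {"A": ["B", "C", "D"], "B": ["E"], "C": [], "D": ["F"], "E": [], "F": []}

def callers_of(module):
    return [m for m, callees in CALLS.items() if module in callees]

def scaffolding(strategy):
    """Return (drivers, stubs) that must be written for the given strategy."""
    drivers = stubs = 0
    for module, callees in CALLS.items():
        has_caller = bool(callers_of(module))
        if strategy == "nonincremental":
            # Each module is tested alone: a driver replaces its caller,
            # and a stub replaces each module it calls.
            drivers += int(has_caller)
            stubs += len(callees)
        elif strategy == "top-down":
            # Callers are already tested, so only the called modules need stubs.
            stubs += len(callees)
        elif strategy == "bottom-up":
            # Called modules are already tested, so only callers need drivers.
            drivers += int(has_caller)
        else:
            raise ValueError(f"unknown strategy: {strategy}")
    return drivers, stubs

for strategy in ("nonincremental", "top-down", "bottom-up"):
    print(strategy, scaffolding(strategy))
# nonincremental (5, 5)
# top-down (0, 5)
# bottom-up (5, 0)
```

Either incremental strategy halves the scaffolding for this hierarchy, because tested modules stand in for one of the two kinds.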

Programming errors related to mismatched interfaces or incorrect assumptions among modules will be detected earlier when incremental testing is used

Debugging should be easier if incremental testing is used because you can isolate the source of the defect more easily

Incremental testing usually results in more thorough testing

Modules are exercised multiple times (for example, in bottom-up testing, module B is exercised again when module A is added)

Incremental testing is superior

Top-Down Testing

The production of stub modules is often misunderstood

If the stub simply returns control or writes an error message without returning a meaningful result, module A will fail, not because of an error in A, but because of a failure of the stub to simulate the corresponding module

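Moreover, returning a "wired-in" output from a stub module is often insufficient, because the calling module usually needs different results from the stub to exercise its different paths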

The production of stubs is not a trivial task
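One way to avoid a wired-in output is a stub scripted per test case. In this sketch the class `ScriptedStub`, the caller `module_a`, and the account data are all hypothetical. The stub returns a different result for each scripted input, records how it was called, and fails loudly on an unscripted input instead of returning a meaningless default.

```python
class ScriptedStub:
    """Hypothetical stub: returns the result scripted for each input it receives."""

    def __init__(self, scripted):
        self.scripted = dict(scripted)  # input -> result the real module would return
        self.calls = []                 # record of how the caller invoked the stub

    def __call__(self, arg):
        self.calls.append(arg)
        if arg not in self.scripted:
            # Fail loudly, so a gap in the stub is not mistaken for an error in the caller.
            raise AssertionError(f"stub called with unscripted input {arg!r}")
        return self.scripted[arg]

# Hypothetical caller: its behaviour depends on what the called module returns.
def module_a(account_id, fetch_balance):
    balance = fetch_balance(account_id)
    return "overdrawn" if balance < 0 else "ok"

stub = ScriptedStub({"acct-1": 120, "acct-2": -5})
print(module_a("acct-1", stub), module_a("acct-2", stub))
```

A stub wired to always return 120 would never drive `module_a` down its "overdrawn" path; scripting one result per test case is what makes both paths testable.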

A module can be tested as soon as its calling module has been tested

So, there is usually more than one choice of which module to test next

If there are critical modules, test these as early (and often!) as possible

A "critical module" might be a complex module, a module with a new algorithm, or a module suspected to be error prone

I/O modules should also be tested as early (and often) as possible

Once in place, they can be used to feed test data to, and report test results from, all the other modules

They also make it possible to demonstrate the program to the user early
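The top-down rule and the "critical first" guideline can be combined into a simple scheduler. This sketch assumes the Figure 5.7 hierarchy (A calls B, C, and D; B calls E; D calls F) and treats F as a hypothetical critical (for instance, I/O) module. Among the eligible modules it prefers any that lie on the path to a critical module; breaking remaining ties alphabetically is an arbitrary choice.

```python
# Call hierarchy assumed for Figure 5.7: A calls B, C, D; B calls E; D calls F.
CALLS = {"A": ["B", "C", "D"], "B": ["E"], "C": [], "D": ["F"], "E": [], "F": []}

def leads_to(module, targets):
    """True if module is a target or calls (directly or indirectly) a target."""
    return module in targets or any(leads_to(c, targets) for c in CALLS[module])

def top_down_order(critical=()):
    """One possible top-down order: a module is eligible once a caller is tested."""
    callers = {m: [c for c, cs in CALLS.items() if m in cs] for m in CALLS}
    tested = []
    while len(tested) < len(CALLS):
        eligible = [m for m in CALLS
                    if m not in tested
                    and (not callers[m] or any(c in tested for c in callers[m]))]
        # Prefer modules on the path to a critical module; then alphabetical order.
        eligible.sort(key=lambda m: (not leads_to(m, critical), m))
        tested.append(eligible[0])
    return tested

print(top_down_order())       # ['A', 'B', 'C', 'D', 'E', 'F']
print(top_down_order({"F"}))  # ['A', 'D', 'F', 'B', 'C', 'E']
```

Marking F as critical pulls D and F ahead of B and C, which is the early-testing effect the guideline asks for.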

Software engineers occasionally feel that the top-down strategy can be overlapped with the program's design phase

BUT, when we are designing the lower levels of a program's structure, we may discover desirable changes or improvements to the upper levels

If the upper levels have already been coded and tested, the desirable improvements will most likely be discarded, an unwise decision in the long run

Bottom-Up Testing

The bottom-up strategy begins with the terminal modules in the program (the modules that do not call other modules)

A module can be tested as soon as all the modules it calls are tested

So, there are usually multiple choices of which module to test next

In most cases, driver modules are easier to produce than stub modules

If there are critical modules, test these as early (and often!) as possible
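The bottom-up rule differs in one respect: a module is eligible only once every module it calls has been tested. This sketch again assumes the Figure 5.7 hierarchy and treats D as a hypothetical critical module, so D and the modules it depends on are scheduled first; ties are broken alphabetically, an arbitrary choice.

```python
# Call hierarchy assumed for Figure 5.7: A calls B, C, D; B calls E; D calls F.
CALLS = {"A": ["B", "C", "D"], "B": ["E"], "C": [], "D": ["F"], "E": [], "F": []}

def descendants(module):
    """All modules called, directly or indirectly, by module."""
    found = set()
    for callee in CALLS[module]:
        found |= {callee} | descendants(callee)
    return found

def bottom_up_order(critical=()):
    """One possible bottom-up order: a module is eligible once all its callees are tested."""
    # Critical modules plus everything they depend on get priority.
    needed = set(critical).union(*(descendants(m) for m in critical))
    tested = []
    while len(tested) < len(CALLS):
        eligible = [m for m in CALLS
                    if m not in tested and all(c in tested for c in CALLS[m])]
        eligible.sort(key=lambda m: (m not in needed, m))
        tested.append(eligible[0])
    return tested

print(bottom_up_order())       # ['C', 'E', 'B', 'F', 'D', 'A']
print(bottom_up_order({"D"}))  # ['F', 'D', 'C', 'E', 'B', 'A']
```

In both orders A comes last, which is the drawback discussed next: no working program exists until the final module is added.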

A drawback of the bottom-up strategy is that the working program does not exist until the last module (module A) is added, and this working program is the complete program

BUT, we cannot make the unwise decision to overlap design and testing, since the bottom-up test cannot begin until the bottom of the program has been designed

The bottom-up strategy is usually favored in industry

Performing the Test

When a test case produces a situation where the module's actual results do not match the expected results, there are two possible explanations:

(1) The module contains an error

(2) The expected results are incorrect

A precise definition of the expected result is a necessary part of a test case

When executing a test, remember to look for side effects (instances where a module does something it is not supposed to do)

Automated test tools can minimize part of the drudgery of the testing process
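The three points above (a precise expected result, a check for side effects, and automation) fit together in an automated test. This sketch uses Python's standard `unittest` framework; the module `normalize_scores` is hypothetical.

```python
import unittest

def normalize_scores(scores):
    """Hypothetical module: scales scores so the largest becomes 1.0."""
    high = max(scores)
    return [s / high for s in scores]

class NormalizeScoresTest(unittest.TestCase):
    def test_expected_result_is_precise(self):
        # The expected output is stated exactly, not judged by eye after the run.
        self.assertEqual(normalize_scores([2, 4, 8]), [0.25, 0.5, 1.0])

    def test_no_side_effect_on_input(self):
        # Look for things the module should NOT do, such as modifying its argument.
        data = [2, 4, 8]
        normalize_scores(data)
        self.assertEqual(data, [2, 4, 8])

# Run with `python -m unittest <this file>` or any unittest-compatible runner.
```

The framework reruns both checks on demand at no extra effort, which also makes them cheap to reuse during regression testing.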

Psychological problems associated with a person attempting to test his or her own programs apply as well to module testing

Rather than testing their own modules, programmers might swap them

More specifically, the programmer of the calling module is always a good candidate to test the called module

Note that this applies only to testing; the debugging of a module should always be performed by the original programmer

Test Planning and Control

Planning, monitoring, and controlling the testing process is a project management challenge

The major mistake most often made in planning a testing process is the tacit assumption that no errors will be found

The obvious result of this mistake is that the planned resources (people, calendar time, and computer time) will be grossly underestimated, a notorious problem in the computing industry

People who will design, write, execute, and verify test cases, and the people who will repair discovered errors, should be identified

Define mechanisms for reporting detected errors, tracking the progress of corrections, and adding the corrections to the system

Test Completion Criteria

Criteria must be designed to specify when each testing phase will be judged to be complete

It is unreasonable to expect that all errors will eventually be detected

The two most common criteria are these:

1. Stop when the scheduled time for testing expires

(Can satisfy this by doing absolutely nothing!)

2. Stop when all the test cases execute without detecting errors; that is, stop when the test cases are unsuccessful

(Subconsciously encourages you to write test cases that have a low probability of detecting errors)

Since the goal of testing is to find errors, why not make the completion criterion the detection of some predefined number of errors?

You might state that a module test of a particular module is not complete until three errors have been discovered

The number of errors that exist in typical programs at the time coding is completed (before a code walkthrough or inspection is employed) is approximately 4 to 8 per 100 program statements

This suggests that a 2,500-statement program would contain roughly 100 to 200 errors (25 × 4 to 25 × 8)

A better practice is to continue testing until the rate at which new defects are discovered drops significantly
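Both ideas can be made concrete. `estimated_errors` applies the 4-to-8-per-100-statements density quoted above. `discovery_has_dropped` is a hypothetical stopping rule: the 25% threshold, the two-week window, and the weekly counts are assumptions for illustration, not figures from the text.

```python
def estimated_errors(statements, low_per_100=4, high_per_100=8):
    """Range of errors expected when coding is complete (4 to 8 per 100 statements)."""
    return statements * low_per_100 // 100, statements * high_per_100 // 100

def discovery_has_dropped(errors_per_week, fraction=0.25, weeks=2):
    """Hypothetical stopping rule: each of the last `weeks` periods found fewer
    than `fraction` of the peak weekly count."""
    if len(errors_per_week) < weeks:
        return False
    peak = max(errors_per_week)
    return all(n < fraction * peak for n in errors_per_week[-weeks:])

print(estimated_errors(2500))                               # (100, 200)
print(discovery_has_dropped([5, 18, 22, 15, 9, 4, 3]))      # True
print(discovery_has_dropped([5, 18, 22, 15, 9]))            # False
```

A rule tied to the observed discovery rate avoids both weaknesses of the two common criteria: it cannot be met by doing nothing, and it rewards test cases that find errors.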

Independent Testing

The advantages usually noted are...

1. Increased motivation in the testing process

2. Healthy competition with the development organization

3. Removal of the testing process from under the management control of the development organization

4. Advantages of specialized knowledge that independent testers bring to bear on the problem

Regression Testing Procedure

- Re-run the test case(s) that revealed the defect(s)

- Re-run test cases that are related to the defect(s)

- Re-run test cases that might logically be affected by the changes that were made to fix the defect(s)

- Re-run a random subset of all test cases, which will include many that should have no relation to the defect(s) or the changes that were made
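The procedure amounts to taking the union of four groups of test cases. In this sketch the test IDs and group contents are hypothetical. Drawing the random sample only from tests not already chosen, and fixing the seed so a run can be reproduced, are design choices of the sketch.

```python
import random

def select_regression_tests(all_tests, revealing, related, affected, sample_size, seed=0):
    """Combine the four groups from the procedure above into one run list."""
    # Tests that revealed the defect, related tests, and possibly affected tests,
    # in that order with duplicates removed.
    chosen = list(dict.fromkeys([*revealing, *related, *affected]))
    # A random subset of the rest, most of which should be unrelated to the fix.
    remainder = [t for t in all_tests if t not in chosen]
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    chosen += rng.sample(remainder, min(sample_size, len(remainder)))
    return chosen

all_tests = [f"t{i}" for i in range(20)]  # hypothetical test IDs
run = select_regression_tests(all_tests, revealing=["t3"], related=["t4", "t3"],
                              affected=["t7", "t8"], sample_size=5)
print(run)
```

The random portion guards against the fix having broken something that nobody expected to be connected to it.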