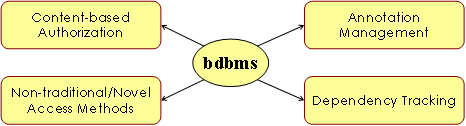

bdbms: Overview

Scientific data management is the backbone of discovery in the life sciences, yet current database technology has not kept pace with the proliferation and specific requirements of scientific data. The limited ability of database engines to provide the functionality needed to manage and process scientific data properly has become a serious impediment to scientific progress.

bdbms is a prototype database system for supporting and processing scientific data. While there are several functionalities of interest, our current focus is on the following key features: (1) annotation and provenance management, (2) dependency tracking, (3) update authorization, and (4) non-traditional and novel access methods.

Annotation Management:

Annotations usually capture users' comments, experiences, provenance (lineage) information, or other related information that is not modeled by the database. Adding and retrieving annotations is an important means of communication and interaction among database users. bdbms treats annotations as first-class objects and provides a framework for (1) adding annotations at multiple granularities, i.e., at the table, tuple, column, and cell levels, (2) storing and indexing annotations, (3) archiving and restoring annotations, and (4) propagating and querying annotations along with query results.
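To make the idea of multi-granularity annotations concrete, here is a minimal in-memory sketch in Python. It is an illustration only, not the bdbms implementation or its query syntax: the `Annotation` and `AnnotationStore` names, the `gene` table, and the convention that `None` means "all rows" or "all columns" are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Annotation:
    """Hypothetical annotation record; None acts as a wildcard."""
    table: str
    row: Optional[int]      # None means "every row" of the table
    column: Optional[str]   # None means "every column" of the table
    text: str

    def covers(self, table, row, column):
        # An annotation applies to a cell if the table matches and each of
        # its row/column fields is either a wildcard or an exact match.
        return (
            self.table == table
            and self.row in (None, row)
            and self.column in (None, column)
        )


@dataclass
class AnnotationStore:
    annotations: list = field(default_factory=list)

    def add(self, table, text, row=None, column=None):
        self.annotations.append(Annotation(table, row, column, text))

    def for_cell(self, table, row, column):
        """Return every annotation text that propagates to one cell."""
        return [a.text for a in self.annotations if a.covers(table, row, column)]


store = AnnotationStore()
store.add("gene", "imported from lab A")                          # table level
store.add("gene", "verified by curator", row=7)                   # tuple level
store.add("gene", "predicted, not measured", column="function")   # column level
store.add("gene", "suspicious value", row=7, column="function")   # cell level

cell_7 = store.for_cell("gene", 7, "function")   # all four annotations apply
cell_8 = store.for_cell("gene", 8, "name")       # only the table-level one applies
```

Storing one record per annotation with wildcard fields, rather than copying the annotation onto every covered cell, keeps a table-level comment at constant size regardless of how many tuples the table holds.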

Dependency Tracking:

Scientific data are full of dependencies and derivations among data items. In many cases, these dependencies and derivations cannot be computed automatically by coded functions, e.g., stored procedures or functions inside the database. Instead, deriving the data may involve prediction tools, lab experiments, or instruments. As a result, tracking such dependencies becomes a significant concern in scientific databases, both in the manual effort it requires and in its effect on data quality and consistency. bdbms provides functionalities such as: (1) defining and modeling the dependencies among data items, (2) tracking the items in the database that are affected by a given modification and need re-evaluation, and (3) annotating query results to indicate whether or not the query answer is up-to-date.
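The three functionalities above can be sketched with a small dependency graph. This is a simplified illustration under stated assumptions, not the bdbms design: the `DependencyTracker` class, its method names, and the item names are hypothetical, and the sketch assumes derived items cannot be recomputed automatically, so they are only flagged as outdated.

```python
from collections import defaultdict, deque


class DependencyTracker:
    """Hypothetical tracker: records 'derived from' edges and stale items."""

    def __init__(self):
        self.dependents = defaultdict(set)  # source item -> items derived from it
        self.outdated = set()               # items awaiting re-evaluation

    def add_dependency(self, source, derived):
        self.dependents[source].add(derived)

    def modify(self, item):
        """Record a new value for `item` and flag everything derived from it."""
        self.outdated.discard(item)         # the item itself is now fresh
        queue = deque([item])
        seen = {item}
        while queue:                        # breadth-first walk over derivations
            current = queue.popleft()
            for child in self.dependents.get(current, ()):
                if child not in seen:
                    seen.add(child)
                    self.outdated.add(child)
                    queue.append(child)

    def annotate(self, items):
        """Label each item in a query answer as up-to-date or outdated."""
        return {
            i: ("outdated" if i in self.outdated else "up-to-date")
            for i in items
        }


tracker = DependencyTracker()
tracker.add_dependency("gene_sequence", "protein_sequence")
tracker.add_dependency("protein_sequence", "protein_function")  # e.g. a lab result

tracker.modify("gene_sequence")
after_gene_change = tracker.annotate(
    ["gene_sequence", "protein_sequence", "protein_function"]
)

# Re-deriving the protein sequence refreshes it, but the function that was
# determined experimentally still needs re-evaluation.
tracker.modify("protein_sequence")
after_rederivation = tracker.annotate(["protein_sequence", "protein_function"])
```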

Update Authorization:

Changes to the database may have important consequences, and hence they should be subject to approval by authorized entities before they become permanent. Update authorization in current database management systems is based on GRANT/REVOKE access models. Although widely accepted, these models authorize an update based only on the identity of the user, not on the content of the data being inserted or updated. In scientific databases, approval often depends on both. In bdbms, we introduce an approval mechanism, termed Content-based Authorization, that allows the database to systematically track changes and allows authorized entities, e.g., database administrators, to approve or deny transactions based on the content of the modified data.
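A rough sketch of the idea follows. It is not the bdbms mechanism: the `ContentAuthorizedTable` class, the `needs_approval` predicate, and the choice to hold changes in a pending queue until they are approved are all assumptions made to keep the example short. The point it illustrates is that the decision to require approval depends on the data being written, not on who writes it.

```python
class ContentAuthorizedTable:
    """Hypothetical key-value table with content-based approval."""

    def __init__(self, needs_approval):
        self.rows = {}                      # committed data
        self.pending = {}                   # change id -> (key, value)
        self.needs_approval = needs_approval
        self._next_id = 1

    def update(self, key, value):
        """Apply the change, or queue it if its content requires approval."""
        if self.needs_approval(key, value):
            change_id = self._next_id
            self._next_id += 1
            self.pending[change_id] = (key, value)
            return change_id                # caller can track the pending change
        self.rows[key] = value
        return None

    def approve(self, change_id):
        key, value = self.pending.pop(change_id)
        self.rows[key] = value              # change becomes permanent

    def deny(self, change_id):
        self.pending.pop(change_id)         # change is discarded


# Assumed rule for illustration: expression levels above 100 look unusual
# and must be reviewed; everything else is accepted immediately.
table = ContentAuthorizedTable(lambda key, value: value > 100)

table.update("gene_a", 42)                  # committed directly
first = table.update("gene_b", 250)         # queued for review
second = table.update("gene_c", 900)        # queued for review

table.approve(first)
table.deny(second)
```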

Indexing and Query Processing:

Scientific databases warrant the use of non-traditional and novel indexing mechanisms beyond B+-trees and hash tables. In bdbms, we integrate non-traditional indexing techniques such as the SP-GiST and SBC-Tree index structures. SP-GiST is an extensible indexing framework that broadens the class of supported indexes to include disk-based versions of space-partitioning trees, e.g., disk-based trie variants, quadtree variants, and kd-trees. Because it is extensible, SP-GiST allows developers to instantiate a variety of index structures efficiently through pluggable modules, without modifying the database engine. The SBC-Tree is an index structure for indexing and searching run-length-encoded (RLE) compressed sequences of arbitrary length. It allows storing and operating on the compressed sequences without decompressing them. Operating over compressed data improves overall system performance because it reduces the memory requirements, the buffer size, and the number of I/Os needed to retrieve the data.
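The example below illustrates what "operating on compressed sequences without decompressing them" means for RLE data. It is not the SBC-Tree, which is a disk-based index; it is a plain-Python sketch with hypothetical helper names (`rle_encode`, `char_at`, `count_char`) showing that lookups and counts can be answered from the runs alone.

```python
from itertools import groupby


def rle_encode(seq):
    """Compress a string into a list of (symbol, run_length) pairs."""
    return [(symbol, len(list(run))) for symbol, run in groupby(seq)]


def char_at(runs, index):
    """Return the symbol at `index` of the original sequence, using only the runs."""
    if index < 0:
        raise IndexError("index must be non-negative")
    for symbol, length in runs:
        if index < length:
            return symbol
        index -= length                     # skip this whole run in one step
    raise IndexError("index out of range")


def count_char(runs, target):
    """Count occurrences of `target` by summing run lengths."""
    return sum(length for symbol, length in runs if symbol == target)


runs = rle_encode("HHHHEEELLLLLH")
symbol_at_5 = char_at(runs, 5)
h_count = count_char(runs, "H")
```

Both operations touch one entry per run rather than one entry per symbol, which is where the savings in memory and I/O come from when sequences contain long runs.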